At GIRO, we’re in the business of saving our clients time and money. Our optimization software helps some of the world’s foremost public transport agencies and postal/parcel organizations maximize the efficiency of their services while minimizing costs, by solving tough problems to unlock significant savings. In more than forty years in the business, we’ve developed – and keep developing – extremely powerful algorithms that help our clients achieve their goals.

Can you do it right and do it fast?

Quite often, the optimization problems that our clients must solve are extremely difficult mathematically, categorized as “NP-hard” problems. What this means in practice is that algorithms generally can’t produce solutions to them that are demonstrably optimal, or at least not without running for a very long time.

Two sayings come to mind: “time is money” and “if it’s worth doing, it’s worth doing right”. These don’t always go well together if “doing it right” costs you too much time.

High-quality solutions that produce substantial efficiency gains and cost savings can often be obtained for these NP-hard problems by heuristic methods, which apply rules less strictly than algorithms. When these methods are sophisticated enough, high-quality solutions can be obtained in a matter of minutes and trying to squeeze out the last drop of savings by going the full algorithmic route won’t produce much additional benefit. But sometimes going beyond a high-quality solution to an optimal one will produce such great benefits that we must invest the time to run the algorithms for NP-hard problems. To maximize the benefits, we want to run the algorithms as quickly as possible. Parallel processing is one way to achieve this.

To maximize the benefits, we want to run the algorithms as quickly as possible. Parallel processing is one way to achieve this.

Many hands make light work – for the right work

Suppose you want to paint a room with four walls of the same size. If you get three friends over to make a foursome and each of you tackles one wall, you can expect to finish four times faster than if you painted all the walls on your own. In other words, the gain in speed is linear, which is ideal because the gain equals the number of resources used: using four painters gets the job done four times as quickly. The same principle applies to software, which can process tasks in parallel when several processors are available.

Parallel processing involves breaking down a computing task into subtasks that can be performed at the same time by different processors so that the whole task is completed more quickly. However, not all tasks are suitable to be broken down for parallel processing. The overall gain in computing speed will depend on what proportion of the program’s tasks can be subdivided to be performed in parallel and whether there are any overhead costs associated with subdividing them.

Going back to our house-painting example, the work of painting lends itself to parallel processing by dividing the work between several painters. However, parallel processing is not the way to go if you want to thank your friends for their hard work by sharing a pizza with them. Slicing it in four and using four ovens to bake the slices at the same time might cook your pizza slightly faster, but it won’t be anything like four times as fast. When you factor in the costs to buy the additional ovens and pay for the energy they use, your investment in parallel processing to cook your pizza this way just won’t pay off.

However, not all tasks are suitable to be broken down for parallel processing. The overall gain in computing speed will depend on what proportion of the program’s tasks can be subdivided...

Will that be processing or threading?

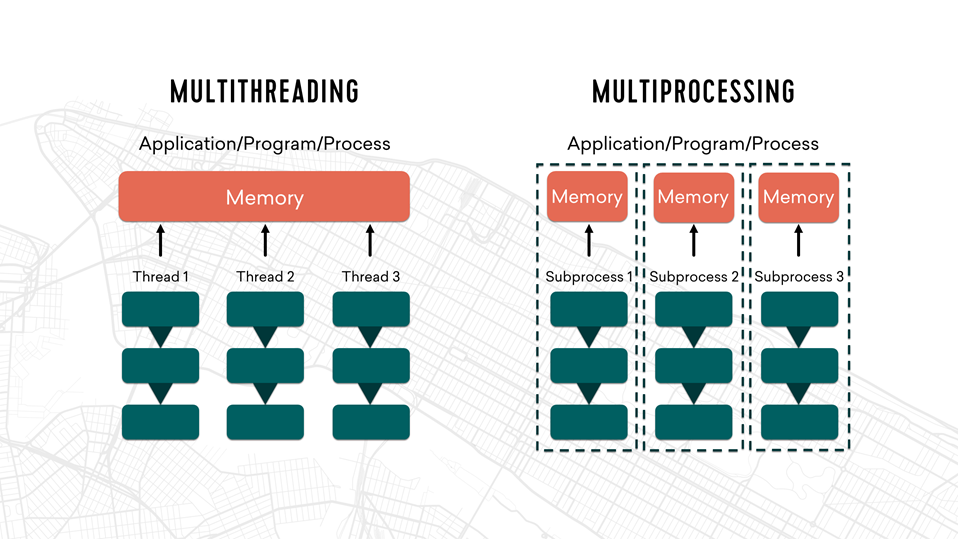

When parallel processing is the way to go, two approaches are available: multiprocessing and multithreading. With multiprocessing, several processes are run at the same time on different processors. With multithreading, a single process is divided into threads that are programmed to run concurrently within the “context” of that process. Each method has its pros and cons. Multiprocessing uses more resources, but it has the advantage of requiring hardly any change to existing coding and being easier to implement. Multithreading is usually harder to implement and increases the potential for problems caused by race conditions, which can occur if access to a shared resource is not properly controlled. However, because the different threads do share the same resources, such as RAM, multithreading has a smaller technology footprint.

We have quite a lot of experience with both these approaches at GIRO. Some of our HASTUS software modules have been using multiprocessing for more than 25 years and we also started using multithreading about 10 years ago.

One module that has a long history of using multiprocessing is our CrewOpt module for crew-schedule optimization. The CrewOpt task scheduler is a parallelization engine that launches all the optimization tasks for the subproblems of a large-scale scheduling problem on different processors and solves them at the same time. The task scheduler can also be used for multiple-strategy processing, “cloning” the same problem to be solved using different parameters all at once. The parameters that produce the best solution are then saved. Using the CrewOpt task scheduler provides an almost linear gain in processing speed versus testing each strategy one after the other.

More and more of our products are also using the multithreading approach to run their algorithms more quickly. Many of them use a multithreaded version of the CPLEX high-performance mathematical programming solver. The use of multithreading for our Customer Impact Simulator and ReachMap algorithms was a big help to our client LA Metro in their NextGen project, saving them a lot of time when they were reimagining their entire bus network and needed to run multiple simulations quickly and accurately.

The cloud gives an extra boost

More and more of our clients are choosing to host their HASTUS installations in the cloud rather than maintaining them on their own infrastructure. This opens up new possibilities for parallel processing, and for running algorithms on virtual machines (VMs) of different sizes according to each algorithm’s resource requirements. It isn’t always cost-effective to expand your on-premises system to cope with occasional extra processing needs. The cloud makes it easy to pay for extra processing power only when you need it, which is especially useful when the multiprocessing approach calls for extra resources. We see a lot more parallel processing in our future, as we expect most of our clients to move to the cloud.

More and more of our clients are choosing to host their HASTUS installations in the cloud rather than maintaining them on their own infrastructure. This opens up new possibilities for parallel processing...

About the authors

Charles Fleurent, Senior Director, Optimization Algorithms

In his 25-year career at GIRO, Charles Fleurent has led the development of the algorithms that power GIRO’s software solutions to optimize public transit planning, scheduling and operations. He spearheads the company’s research efforts, both internally and in collaboration with various research institutes. In 2021, he received the Canadian Urban Transit Association’s Leadership Award for Excellence, recognizing his contributions to advancements in public transit optimization, especially his involvement in research on the use of artificial intelligence to optimize crew scheduling. He also serves as senior editor for the scientific journal Public Transport: Planning and Operations published by Springer.

Loïc Bodart, Assistant Director, Planning-Routing Algorithms

Loïc Bodart leads the team developing the algorithms that power GIRO’s software solutions to optimize planning and routing of public transport and parcel/postal services. He began his career in software development optimizing airline crew management. In close to 15 years at GIRO, he has filled a succession of roles in software development which have perfected his expertise. He holds a master’s degree in Operations Research from Polytechnique Montréal.

Véronique Bouffard, Assistant Director, HASTUS Algorithms

Véronique Bouffard leads the team developing the algorithms that power GIRO’s software solutions to optimize scheduling and operations management of all modes of public transport by road and rail. She has built her expertise in a career of close to 20 years at GIRO with a solid focus on algorithmic development. She holds a master’s degree in Computer Science from Université de Montréal.